AI: thoughts on the current state of play

28 June 2024

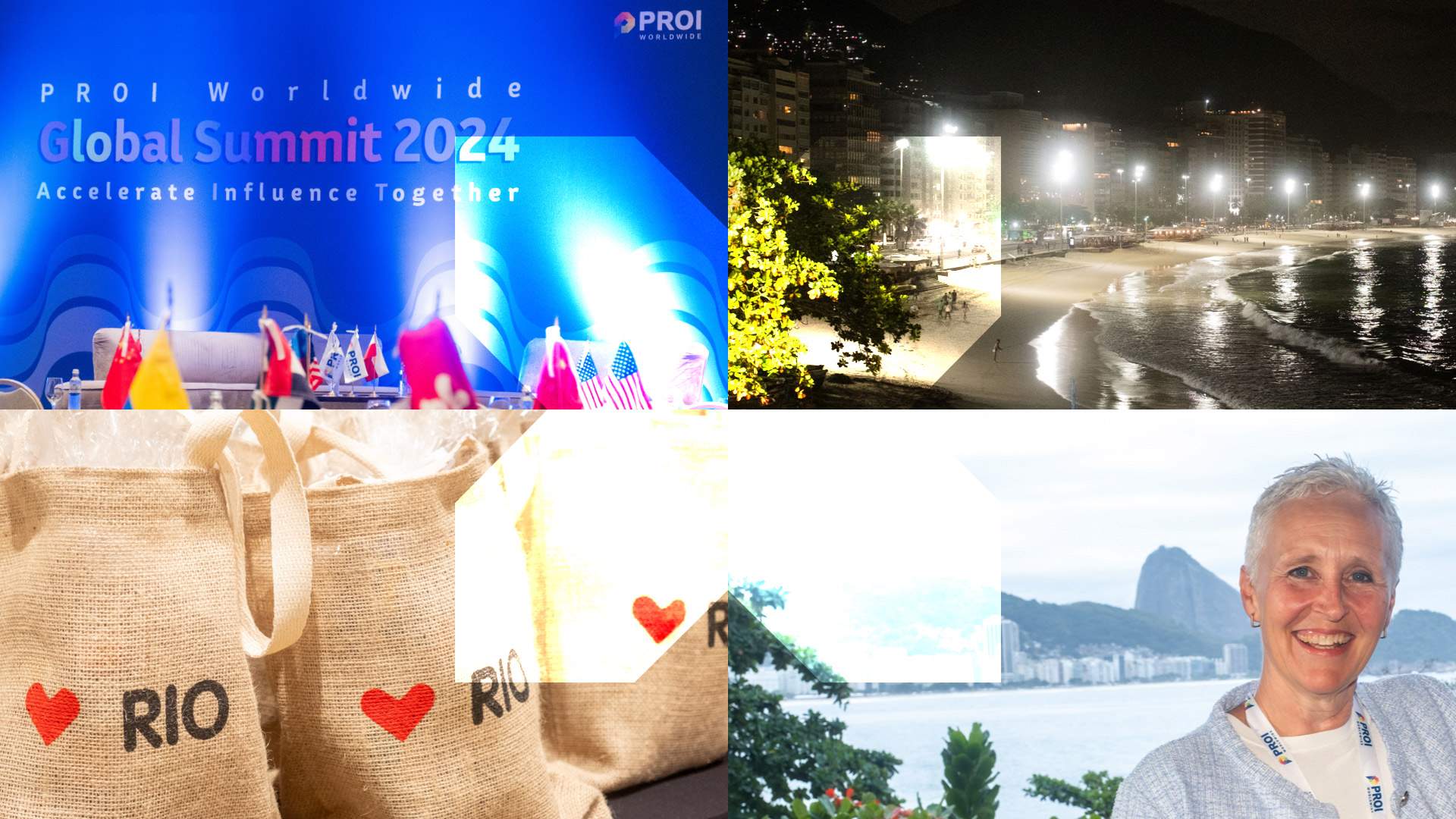

At the PROI Worldwide Global Summit in Rio last month, AI came up in every session. I heard and saw some exciting things, and the lightning speed of it all means huge opportunities for agencies and marketers.

I’m not going to speculate about ‘what’s next’ in AI; plenty of others are doing that. Internally at KISS, we’re still forming our own guidelines as a management team. We’ve set up an ‘AI working group’ to explore the prospects and the challenges we need to navigate, and understanding how to strike the balance between embracing the opportunities and mitigating the threats is fast becoming a top priority.

We also haven’t yet settled on a vision for how AI fits into our business. Right now, I suspect very few Boards have, but it’s an active topic for us.

So, for what it’s worth, here are my thoughts.

1. We all need to get good at using it

There’s no doubt that today’s AI tools are already superb at the right kind of repetitive, data-heavy task, from summarising a white paper or drafting minutes and emails to deep research and live campaign-data analysis, so we should all be using them for that today. We should also be looking seriously at how AI can, for example, help build a better customer experience or tighten up the targeting of prospects. It will take practice for all of us, and we should expect errors, but overall it’s worthwhile.

2. We need to beware of the Type II error

Wharton’s Ethan Mollick describes how the internet has trained us to expect ‘Type I errors’, where the tool simply doesn’t produce a good answer. But we all need to beware of what he calls the ‘Type II error’, where the tool subtly makes facts up or confidently says the wrong thing. This could catch any of us out, but especially more junior staff, who have less industry and life experience to help them spot a convincing fake (although my 15-year-old spots fake videos quicker than I do…).

For example, Fast Company recently asked some top AI tools about the practicalities of voting in the US elections. The answers were convincing, but they contained significant gaps and errors, and some were even illegal. Bias is also a big issue: some experts claim that even good AI tools ‘try too hard to please’, and, more disturbingly, most have shown bias and errors, for example on African topics and in languages other than English. One factor behind biased output is, of course, bias in the source data the tools are built on.

Other tools such as Perplexity, Claude and Gemini are as guilty of this as ChatGPT, but all the good tools can also be challenged to back up their claims and to rework content in different styles.

3. We need to spend time with our AI

I agree with the experts who say we need to spend time teaching AI tools what we like and improving their draft outputs. Think of the tool as a smart intern who brings great energy, core skills and intellect to the table but needs a fair bit of input and direction. The New York Times’ Ezra Klein suggests prompting it with examples of output you like, and another well-known technique is ‘chain of thought’: working step by step through a question rather than going in cold with a single long, complex question. The good news is that, working together, you and the tool can keep learning, be highly productive, and look good to your stakeholders!

4. We should stop worrying about the froth

From deepfake US presidents to fears of machine-led Armageddon, it’s easy to get caught up in the hysteria around AI’s misuse. Yes, ChatGPT now has ‘Rizz GPT’ to help you look better on dating apps, and it may even be true that Replika’s AI-companion software had to be ‘lobotomised’ due to overuse for ‘erotic purposes’… but I feel we shouldn’t let froth like this distract us from the major opportunity here.

Yes, the dizzying speed of change sometimes worries even AI industry experts. Yes, there is a ‘jagged edge’ to innovation in AI: right now it’s great at some tasks and not at others. And, as one PROI presenter put it, the ‘hype cycle’ is in full swing. AI is currently getting too much attention (and funding), and that will soon be followed by a plunge into the ‘trough of disillusionment’. BUT I know it will then level out onto the ‘plateau of productivity’.

5. We should get in early with AI training and ‘guide rails’

We need to invest in AI tool training for everyone, because AI is already doing some lower-level tasks. People need to get comfortable with that and upskill to more value-adding work, such as carefully analysing output or making sure source data is as clean and unbiased as possible.

AI developers talk about ‘guide rails’ for their algorithms, to keep them out of no-go areas and on the right side of moral and ethical issues. As leaders, we need to get in early with similar ‘guide rails’ for how our people use AI, both internally and with customers and other external stakeholders.

Of course, training and education come first. We recently hosted an AI training event at KISS HQ, joined by our colleagues from PROI agency Mojo. We tested out different ways of using AI tools, and it was a day packed with power learning!

What are your thoughts? If you’d like to join the conversation, get in touch…